Chapter 4. Tuning the operating system 117

Draft Document for Review May 4, 2007 11:35 am 4285ch04.fm

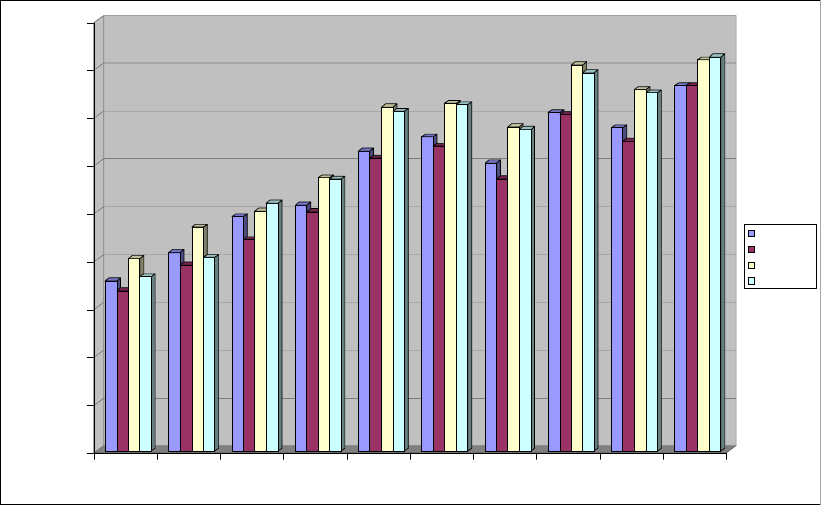

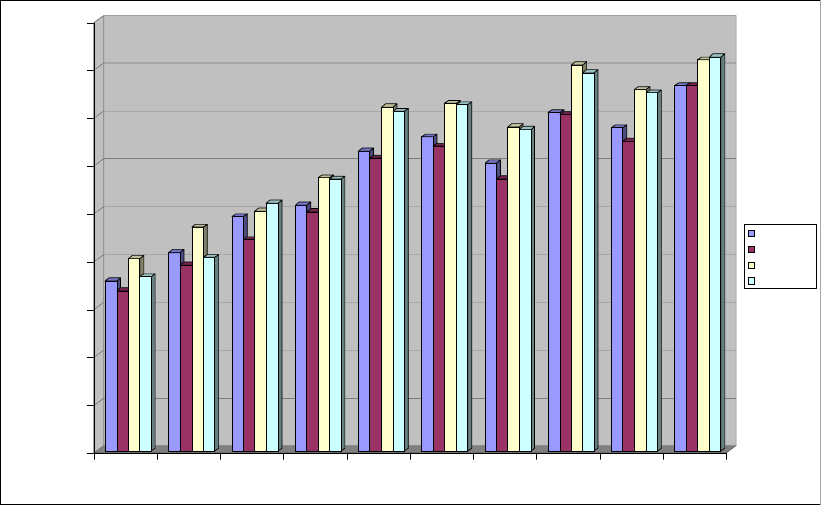

cases the anticipatory elevator usually has the lowest throughput and the highest latency. The three other schedulers perform equally well up to an I/O size of roughly 16 kB, at which point the CFQ and NOOP elevators begin to outperform the deadline elevator (unless disk access is very seek-intensive), as can be seen in Figure 4-7.

Figure 4-7 Random read performance per I/O elevator (synchronous)

Complex disk subsystems

Benchmarks have shown that the NOOP elevator is an interesting alternative in high-end server environments. When using very complex configurations of IBM ServeRAID or TotalStorage® DS class disk subsystems, the lack of ordering capability of the NOOP elevator becomes its strength. Enterprise-class disk subsystems may contain multiple SCSI or FibreChannel disks, each with its own disk heads, with data striped across the disks. It becomes very difficult for an I/O elevator to anticipate the I/O characteristics of such complex subsystems correctly, so you might often observe at least equal performance with less overhead when using the NOOP I/O elevator. Large-scale benchmarks that use hundreds of disks most likely use the NOOP elevator.
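Before experimenting with the NOOP elevator on such a subsystem, it can help to check which elevator a block device currently uses. The following is a minimal sketch, not a definitive tool. It assumes the kernel exposes the elevator list in `/sys/block/<device>/queue/scheduler` with the active entry in square brackets (for example `noop anticipatory deadline [cfq]`). The function names and the device name `sda` are illustrative and do not come from the text above.

```python
from pathlib import Path


def parse_scheduler_line(line):
    """Split a sysfs scheduler line into (active, available).

    The active elevator is the token wrapped in square brackets,
    e.g. "noop anticipatory deadline [cfq]" -> active is "cfq".
    """
    active = None
    available = []
    for token in line.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]  # strip the brackets
            active = token
        available.append(token)
    return active, available


def read_scheduler(device, sysfs_root="/sys/block"):
    """Read and parse the elevator list for one block device.

    Assumption: the file <sysfs_root>/<device>/queue/scheduler exists.
    """
    path = Path(sysfs_root) / device / "queue" / "scheduler"
    return parse_scheduler_line(path.read_text())


if __name__ == "__main__":
    # Parse a sample line instead of touching the real system.
    sample = "noop anticipatory deadline [cfq]"
    active, available = parse_scheduler_line(sample)
    print("active:", active)
    print("available:", ", ".join(available))
```

On a live system, `read_scheduler("sda")` would return the same kind of pair for the hypothetical device `sda`, provided the file exists and is readable.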

Database systems

Due to the seek-oriented nature of most database workloads, some performance gain can be achieved by selecting the deadline elevator for these workloads.
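As a sketch of how the deadline elevator might be selected for a single device at run time, the helper below writes the elevator name to the device's scheduler file. It assumes the same sysfs layout (`/sys/block/<device>/queue/scheduler`), that the script runs with root privileges, and that the kernel offers the requested elevator. The function name `set_scheduler` and the device name `sdb` are hypothetical.

```python
from pathlib import Path


def set_scheduler(device, elevator, sysfs_root="/sys/block"):
    """Select an I/O elevator for one block device.

    Raises ValueError if the elevator is not among those listed in
    the scheduler file, so that a typo is caught before writing.
    """
    path = Path(sysfs_root) / device / "queue" / "scheduler"
    # The active entry is shown in brackets; drop them to get plain names.
    offered = path.read_text().replace("[", "").replace("]", "").split()
    if elevator not in offered:
        raise ValueError(
            f"{elevator!r} is not offered for {device}: {offered}"
        )
    path.write_text(elevator)


# Example call (requires root, and 'sdb' is a hypothetical device):
#   set_scheduler("sdb", "deadline")
```

A setting made this way applies only to the named device and does not survive a reboot, so it suits benchmarking one elevator against another before making a permanent choice.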

Virtual machines

Virtual machines, whether in VMware or VM for System z, usually communicate with the underlying hardware through a virtualization layer. Hence a virtual machine is not aware of whether the assigned disk device consists of a single SCSI device or an array of FibreChannel disks on a TotalStorage DS8000™. The virtualization layer takes care of the necessary I/O reordering and the communication with the physical block devices.

CPU bound applications

While some I/O schedulers may offer higher throughput than others, they may at the same time create more system overhead. The overhead that, for instance, the CFQ or deadline elevator causes comes from aggressively merging and reordering the I/O queue.

[Figure 4-7 plot: throughput in kB/sec (y-axis, 0 to 180000) against I/O size in kB/op (x-axis, 4 to 2048) for the Deadline, Anticipatory, CFQ, and NOOP elevators]